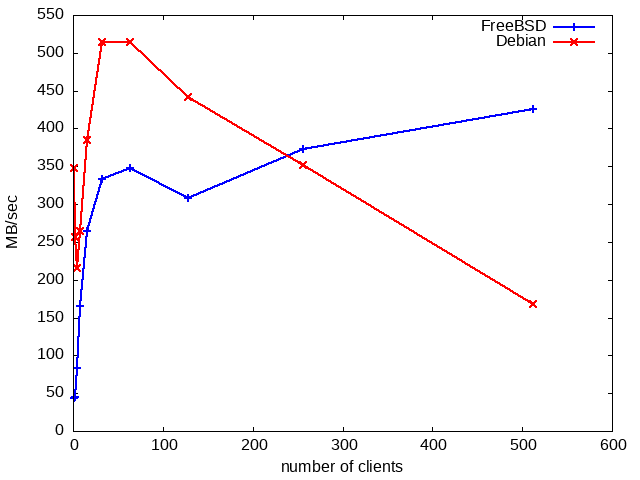

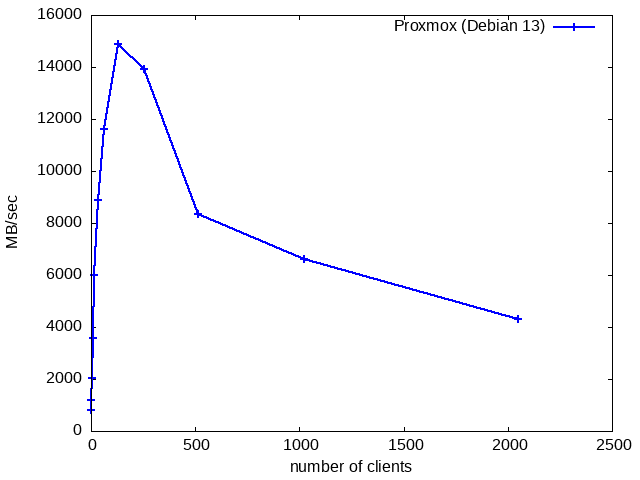

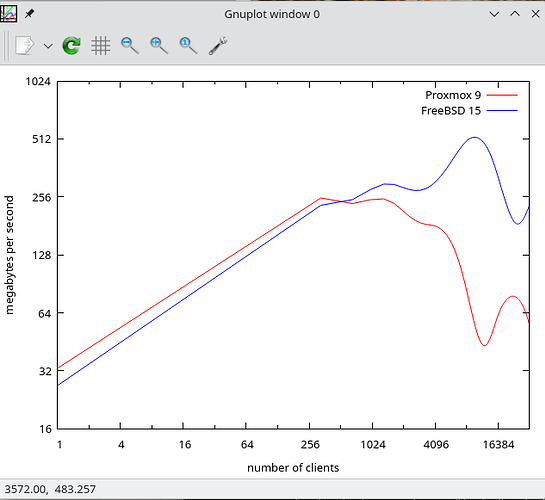

Great topic! Hm. It’s likely because FreeBSD often runs with a leaner default stack, so I/O passes through fewer layers.

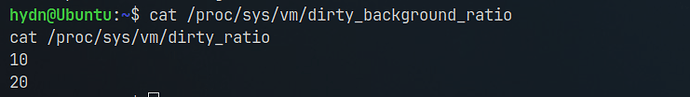

Linux, on the other hand, offers more flexibility and features, and each of them adds overhead: the I/O scheduler, blk-mq, cgroups, device-mapper (dm) layers, LVM, md, and filesystem journaling. With that many layers in the path, you run into extra queueing and lock contention a lot more easily.

It’s still very fast! But yes, Linux can be slower than FreeBSD out‑of‑the‑box in some storage benchmarks and configurations.

As far as closing the gap, it’s a bit outdated now and only loosely related, but some of the tips may help:

In addition, I would make sure the CPU governor is set to performance. If the CPU is sitting at 800 MHz because the governor downclocked it, storage I/O operations may be slower. It really depends on the exact hardware, but low-frequency/powersave modes can absolutely hurt I/O latency and throughput, and that widens the gap with FreeBSD.
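To check the governor before benchmarking, a small script along these lines may help. It is only a sketch: it assumes the usual Linux cpufreq sysfs layout (`/sys/devices/system/cpu/cpuN/cpufreq/scaling_governor`), and the function names `read_governors` and `cpus_not_in_performance` are made up for this example.

```python
from pathlib import Path


def read_governors(cpu_root="/sys/devices/system/cpu"):
    """Return {cpu_name: governor} for every CPU that exposes cpufreq.

    Assumes the standard sysfs layout; CPUs without a cpufreq
    directory (or an unreadable file) are simply skipped.
    """
    governors = {}
    for gov_file in sorted(Path(cpu_root).glob("cpu[0-9]*/cpufreq/scaling_governor")):
        cpu_name = gov_file.parent.parent.name  # e.g. "cpu0"
        try:
            governors[cpu_name] = gov_file.read_text().strip()
        except OSError:
            continue
    return governors


def cpus_not_in_performance(governors):
    """Return the sorted names of CPUs whose governor is not 'performance'."""
    return sorted(cpu for cpu, gov in governors.items() if gov != "performance")


if __name__ == "__main__":
    current = read_governors()
    slow = cpus_not_in_performance(current)
    if not current:
        print("no cpufreq information found (not Linux, or cpufreq disabled?)")
    elif slow:
        print("not in performance mode:", ", ".join(slow))
    else:
        print("all CPUs use the performance governor")
```

If it reports CPUs in powersave or similar, something like `cpupower frequency-set -g performance` (run as root) is the usual way to switch, though the available governors depend on the cpufreq driver in use.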

FreeBSD will tend to sit at or near a high non‑turbo frequency unless you explicitly enable powerd[xx] or tune power settings.

For NVMe SSDs, the "none" I/O scheduler is usually the best choice, and it is already the default on most distributions.
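It is still worth confirming which scheduler is actually active. Here is a minimal sketch that reads `/sys/block/<dev>/queue/scheduler`, where the kernel marks the active scheduler with square brackets (for example `[none] mq-deadline kyber`). The helper names are hypothetical, and the fallback for a bare single-word file is an assumption about how some kernels report devices without a selectable scheduler.

```python
import re
from pathlib import Path


def active_scheduler(scheduler_line):
    """Extract the active scheduler from a sysfs 'scheduler' line.

    The active entry is the one in square brackets. If there are no
    brackets and the line holds a single word, return that word
    (assumed fallback); otherwise return None.
    """
    match = re.search(r"\[([^\]]+)\]", scheduler_line)
    if match:
        return match.group(1)
    words = scheduler_line.split()
    return words[0] if len(words) == 1 else None


def nvme_schedulers(block_root="/sys/block"):
    """Return {device: active_scheduler} for every nvme* block device."""
    result = {}
    for sched_file in sorted(Path(block_root).glob("nvme*/queue/scheduler")):
        device = sched_file.parent.parent.name  # e.g. "nvme0n1"
        try:
            result[device] = active_scheduler(sched_file.read_text())
        except OSError:
            continue
    return result


if __name__ == "__main__":
    for device, scheduler in nvme_schedulers().items():
        note = "" if scheduler == "none" else "  <- consider switching to none"
        print(f"{device}: {scheduler}{note}")
```

Writing the scheduler name back to the same sysfs file as root (for example `echo none > /sys/block/nvme0n1/queue/scheduler`) changes it until the next reboot.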

Also check if your tests are passing through dm-crypt or any extra layer that might be running single threaded. FreeBSD handles a lot of this work in parallel, so Linux needs a bit of manual tuning to keep up.
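One way to see whether a benchmark target sits on top of dm-crypt or other device-mapper layers is to walk `/sys/block/dm-*`. The sketch below assumes the common sysfs layout (`dm/name`, `dm/uuid`, and a `slaves/` directory) and the convention that the device-mapper UUID starts with the layer type, such as `CRYPT-` or `LVM-`. The function names are invented for illustration, and it only lists the layers; it does not tell you how many threads each one uses.

```python
from pathlib import Path


def _read(path):
    """Read and strip a small sysfs file, returning '' if unavailable."""
    try:
        return path.read_text().strip()
    except OSError:
        return ""


def dm_layers(block_root="/sys/block"):
    """Describe each device-mapper device found under block_root.

    'kind' is the UUID prefix (e.g. 'CRYPT', 'LVM'), which is a
    naming convention of the tools that create the mapping, not a
    kernel guarantee. 'slaves' are the devices directly underneath.
    """
    layers = []
    for dm_dir in sorted(Path(block_root).glob("dm-*")):
        uuid = _read(dm_dir / "dm" / "uuid")
        slaves_dir = dm_dir / "slaves"
        layers.append({
            "device": dm_dir.name,
            "name": _read(dm_dir / "dm" / "name"),
            "kind": uuid.split("-", 1)[0] if uuid else "unknown",
            "slaves": sorted(p.name for p in slaves_dir.iterdir())
            if slaves_dir.is_dir() else [],
        })
    return layers


def crypt_layers(layers):
    """Return only the layers that look like dm-crypt mappings."""
    return [layer for layer in layers if layer["kind"] == "CRYPT"]


if __name__ == "__main__":
    for layer in dm_layers():
        below = ", ".join(layer["slaves"]) or "?"
        print(f'{layer["device"]} ({layer["name"]}): {layer["kind"]} on {below}')
```

If the filesystem under test shows up behind a `CRYPT` entry, benchmarking the raw device as well gives a rough idea of how much the extra layer costs.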

You could say FreeBSD out of the box is like a sports car already in sport mode. It gives you raw power with nothing extra stepping in.

Linux out of the box is the same sports car, but it starts in comfort mode with more systems active. You get more flexibility and more options/features, but you also feel a bit less of that straight power until you tune it.