Docker is a platform for packaging and running applications in isolated units called containers. Each container bundles an application together with its libraries and dependencies, sharing the host’s Linux kernel instead of a full separate OS. In practice, containers are much lighter than traditional virtual machines, yet they allow you to deploy services (websites, databases,…

Nice article, but it doesn’t explain why Docker is better than running those services in a “Linux Jail” or in a VM. I might try installing it just to play with some things I’ve been wanting to try, IDK.

Anyways, very well written article.

I haven’t used Docker yet, but I am in the market for a couple of containers. I have also been comparing Docker and Podman.

Any thoughts on the differences?

@hydn Great article as always! I enjoy reading what you put together as it is always organized well.

@tmick I do not have much experience with “Linux Jails”, so I won’t be able to speak to that, but I use Docker containers and Docker Compose daily. 90% of the services I run at home are through containers. I have a few VMs still up and running as well.

I personally chose to explore containers more due to an incident I ran into many years ago with my hosted email setup. I use a combination of postfix, dovecot, and opendkim. At the time, I was running them “on bare metal” next to an Icinga2 instance, a Grafana instance, and several other apps.

I was installing updates with apt (sudo apt update && sudo apt upgrade -y), and at the end of the install, postfix was toast. My /etc/postfix directory was mostly empty, and I couldn’t get my email server back up and running (thankfully the actual emails were fine).

I was relatively new to Linux at this time too, so I’m sure plenty of mistakes were made by me that got me into this situation. Maybe I had conflicts in dependencies? Maybe I answered questions from apt wrong? Maybe postfix did an update and I misinterpreted what was happening? Regardless, I decided right then and there, that I didn’t want that situation to happen again.

So why do I use Docker and containers over jails/VMs? Because I wanted to create an environment where one package’s dependencies wouldn’t impact another’s. And I wanted to create a way to quickly “get back” to where I was before a change.

Containers are great for both of those use cases. They are meant to be ephemeral. You can stand them up and blow them away and they should still function the next time you spin it back up. Your config and data live outside of the container, so you have little risk. I can also easily “transfer” my hosted services to another machine: I just rsync the configs and data volume over, spin up a container, and know it will function exactly the same.

Can you do this in a VM? Absolutely! To me, a VM feels too heavy for my use case though. Why spin up an entire operating system and services that require dedicated, high resource allocation from the host when I can get the same with less effort and less resource allocation in a container? Containers are definitely not the end-all-be-all answer, and I’ve definitely seen them used as a hammer when the right tool should’ve been a screwdriver.

I’m guessing you have the experience and knowledge to do something similar with a jail? And I would imagine, based on my limited understanding of jails or chroot, that you could make that function in a very similar way. But for me, containers were the best choice for my use cases. Hopefully my perspective can help answer some of the “why” part of your question!

Your reply mostly answered my “why” question, but it brings up another one. When you state

They are meant to be ephemeral. You can stand them up and blow them away and they should still function the next time you spin it back up.

You’re saying there’s a backup methodology for them, then?

Great question ShyBry747!! I haven’t used either one so I’m no help there.

Absolutely!

The containers themselves are ephemeral. Every time you start up that container, either with the docker run command or from a Dockerfile that starts with a FROM line, you get the exact same result each time. The closest analogy would be a base image for a VM.

So, if I want a basic web server like Apache, I can do docker run httpd or I can put FROM httpd in a Dockerfile, and I can expect that, on any machine using Docker, I get the same image for a given image version. So backing up the container itself just isn’t necessary.
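As a minimal sketch of the FROM httpd route, the snippet below writes a two-line Dockerfile next to a sample page. The my-site directory, the public folder, and the page content are made-up names for illustration. The tag is pinned to httpd:2.4 on the assumption that you want builds to stay on one release line, since a bare httpd resolves to the latest tag, which moves over time.

```shell
# Hypothetical project layout: my-site/ holds the Dockerfile and the content.
mkdir -p my-site/public
echo '<h1>Hello from a container</h1>' > my-site/public/index.html

# The official httpd image serves files from /usr/local/apache2/htdocs/.
cat > my-site/Dockerfile <<'EOF'
FROM httpd:2.4
COPY ./public/ /usr/local/apache2/htdocs/
EOF
```

With Docker installed, `docker build -t my-site my-site` followed by `docker run --rm -p 8080:80 my-site` should serve the page on port 8080. Throwing the container away loses nothing, because everything needed to rebuild it sits in that directory.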

Your configs and your data are not ephemeral. They need to persist across container instances and across container hosts:

- Configs: Any file that alters the behavior of the container instance. Examples would be your Dockerfile, the httpd.conf file for a web server, the www.conf file for a php-cgi/php-fpm container, etc.

- Data: Your code you need the application inside the container instance to run, the database and its tables (maybe a .sqlite file or maybe a volume that stores all of Postgres’ or MariaDB’s files)

You mount (read: attach) your configs and data to the container instance so that they persist. And anything you would mount or attach becomes your backup material.
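To make the mount-then-back-up idea concrete, here is a rough sketch built around the httpd example. The function names, the conf/ and htdocs/ layout, the container name, and port 8080 are all assumptions for illustration; the paths inside the container are the ones the official httpd image uses.

```shell
# Start Apache with config and content bind-mounted from a host directory.
# $1 = absolute path to a service directory (hypothetical layout: conf/ and htdocs/).
start_web() {
  docker run -d --name web -p 8080:80 \
    -v "$1/conf/httpd.conf":/usr/local/apache2/conf/httpd.conf:ro \
    -v "$1/htdocs":/usr/local/apache2/htdocs/:ro \
    httpd:2.4
}

# Archive exactly what was mounted; the container itself is not backed up.
# $1 = service directory, $2 = path of the archive to create.
backup_mounts() {
  tar -czf "$2" -C "$1" conf htdocs
}
```

Something like `backup_mounts ~/services/web ~/backups/web.tar.gz` (hypothetical paths) would capture the backup material, and after restoring that directory on another Docker host, `start_web ~/services/web` should bring the same service back.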

I’m probably over-simplifying some terminology, so someone with more technical knowledge about containers can correct me on the definitions, but hopefully this makes some sense!

@tmick Thanks for reading and for the nudge. This one was a first-step/intro, so I skipped the VM or jail comparison. That deserves its own follow-up.

Short version: Docker lands between a full VM and a chroot or jail. You get clean dependency isolation, reproducible images, and easy portability without running a second OS per service. Compared to jails, you also get a standard workflow, simple networking, and images you can move between hosts. On a single box with multiple services, that convenience adds up fast.

If you spin up Docker, let us know your thoughts.

@shybry747 I need to compare with Podman as well.

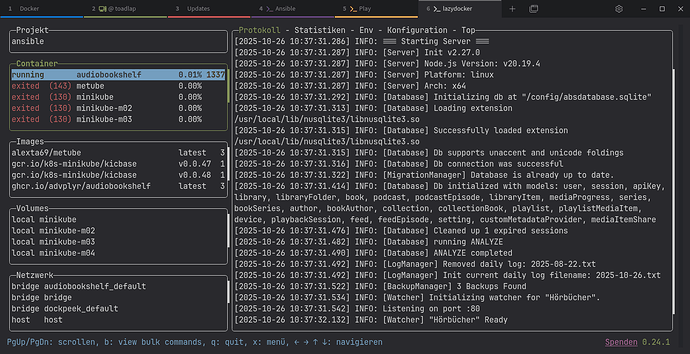

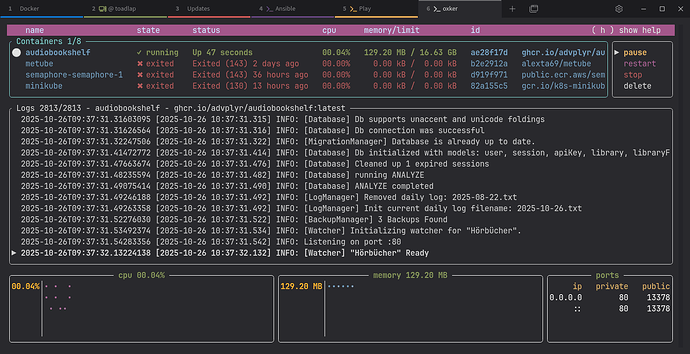

You can use Podman in place of Docker, and it also works with Docker Compose, minikube, and some other container tooling.

More about the differences: Podman vs Docker: What's the Difference?

This was an excellent read. Since I am on Fedora, I would definitely lean toward Podman.

I recently discovered the tool Portainer (GitHub - portainer/portainer: Making Docker and Kubernetes management easy.), which lets you manage all your Docker images through a comfortable web interface.

I decided to add this tool to my workflow, and I use Docker Compose to restart the container automatically each time the machine boots up.
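For anyone wondering what the restart-on-boot part can look like, below is a small sketch that writes a Compose file with a restart policy. It is not the poster's actual setup: the web-stack directory, the web service name, and the use of the httpd image are assumptions chosen to match the earlier examples in this thread.

```shell
# Hypothetical stack directory; the service and image are placeholders.
mkdir -p web-stack

cat > web-stack/compose.yaml <<'EOF'
services:
  web:
    image: httpd:2.4
    ports:
      - "8080:80"
    # Restart the container whenever the Docker daemon starts,
    # unless it was stopped by hand.
    restart: unless-stopped
EOF
```

Running `docker compose up -d` from inside web-stack starts the service once. After that, the restart policy brings it back after a reboot, assuming the Docker daemon itself is set to start at boot (on systemd distributions, `sudo systemctl enable docker`).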

Highly suggested.

I don’t get any sense here of what the performance and resource costs of using Docker are.

The gains are basically being able to run a container just like a normal program (app, whatever) with very little hit to the system’s performance. Try either Podman or Docker with a container of the app you want to try. Then post your experience details here.