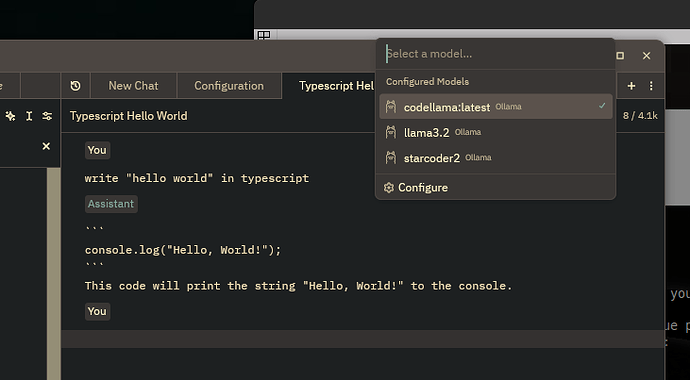

As it’s open source, all the code making network calls is always public. I have mine hosted offline and connect to it via Open WebUI.

I would say at least 32 GB of RAM, 16+ CPU cores, and an Nvidia RTX 4060 Ti 16GB, an AMD RX 7800 XT 16GB, or better. But refer to this page.

The two options are the full model or quantized models. Most of us will be running quantized models, but here’s a comparison.

1. Full Model (FP16/FP32)

- Precision: Uses full-precision floating-point numbers (16-bit or 32-bit).

- Memory Usage: Requires a lot of VRAM or RAM—often 20 GB+ for larger models.

- Performance: Provides the highest accuracy and retains all fine-tuned capabilities.

- Inference Speed: Slower than quantized models because of higher computation demands; needs powerful hardware.

- Use Case: Best for research, high-end inference, and tasks where precision is crucial.

Pros:

- Highest accuracy

- Retains all model weights and structures

- Best suited for detailed and complex tasks

Cons:

- Very high memory requirements

- Requires powerful GPUs or TPUs

- Slower inference speed

2. Quantized Models (8-bit, 4-bit, etc.)

- Precision: Uses reduced-precision weights (8-bit, 4-bit, etc.) instead of 16-bit or 32-bit floating point.

- Memory Usage: Requires much less VRAM or RAM; can run on 8GB GPUs or lower.

- Performance: Slightly less accurate, with the loss more noticeable at 4-bit or more extreme quantization.

- Inference Speed: Faster than full models, even on consumer-grade hardware.

- Use Case: Best for consumer hardware and efficient inference where small accuracy losses are acceptable.

Pros:

- Requires less RAM/VRAM (can run on 8GB GPUs or lower)

- Faster inference times

- Works well on consumer-grade hardware

Cons:

- Slight degradation in accuracy (more noticeable in 4-bit or extreme quantization)

- Some models may lose certain capabilities due to weight compression

- Not ideal for research-level precision

Comparison Table

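| Feature | Full Model (FP16/FP32) | Quantized Model (8-bit, 4-bit) |
|---|---|---|
| Accuracy | Highest | Slightly lower (more noticeable at 4-bit) |
| Memory Usage | Very high (often 20 GB+) | Low (can run on 8GB GPUs or lower) |
| Inference Speed | Slower | Faster |
| Hardware Needs | Powerful GPUs or TPUs | Consumer-grade hardware |
| Best For | Research, high-detail inference | Consumer hardware, efficient inference |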
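
To get a feel for where the memory numbers come from, here is a rough sketch of the arithmetic: weight storage is roughly parameter count times bits per weight, divided by 8. The 14B model size is just a hypothetical example, and the estimate ignores the KV cache, activations, and runtime overhead, so real usage will be higher.

```python
# Rough weight-memory estimate: parameters x bits per weight / 8 = bytes.
# Assumption: this counts weights only (no KV cache, activations, or overhead).

BITS_PER_WEIGHT = {"FP32": 32, "FP16": 16, "8-bit": 8, "4-bit": 4}


def weight_memory_gb(params_billions: float, bits: int) -> float:
    """Approximate weight storage in GB (1 GB = 10**9 bytes)."""
    total_bytes = params_billions * 1e9 * bits / 8
    return total_bytes / 1e9


if __name__ == "__main__":
    # 14 billion parameters is a hypothetical model size for illustration.
    for name, bits in BITS_PER_WEIGHT.items():
        print(f"14B model at {name}: ~{weight_memory_gb(14, bits):.0f} GB")
```

For that hypothetical 14B model this works out to about 56 GB at FP32, 28 GB at FP16, 14 GB at 8-bit, and 7 GB at 4-bit, which is why quantized versions are the ones that fit on consumer cards.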

Which One Should You Use?

- Have a high-end GPU with lots of VRAM? Use full models.

- Have a consumer GPU, or just need fast responses? Use quantized models.

- Fine-tuning? Use full models (though quantized models might work, depending on the framework).
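
The rule of thumb above can be sketched as a small helper that picks the highest precision whose weights fit in your VRAM. This is only an illustration: the function name, the 80% headroom factor (leaving room for the KV cache and overhead), and the weights-only estimate are all my assumptions, not something a specific tool does.

```python
from typing import Optional

# Candidate precisions, from highest to lowest, with bits per weight.
PRECISIONS = (("FP16", 16), ("8-bit", 8), ("4-bit", 4))


def pick_precision(params_billions: float, vram_gb: float,
                   headroom: float = 0.8) -> Optional[str]:
    """Return the highest precision whose weights fit in headroom * VRAM.

    Assumption: weights need params_billions * bits / 8 GB, and only
    `headroom` (80% by default) of the VRAM is available for them.
    Returns None if even 4-bit does not fit.
    """
    budget_gb = vram_gb * headroom
    for name, bits in PRECISIONS:
        needed_gb = params_billions * bits / 8
        if needed_gb <= budget_gb:
            return name
    return None


if __name__ == "__main__":
    # Hypothetical 7B model on a 16 GB consumer card vs. a 24 GB card.
    print(pick_precision(7, 16))
    print(pick_precision(7, 24))
```

With these assumptions, a hypothetical 7B model lands on 8-bit for a 16 GB card and on FP16 for a 24 GB card, while a 70B model does not fit on a 16 GB card at any of the listed precisions.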

When run offline, it’s self-contained. These models do not require an internet connection to run.

If you need to monitor your GPU performance, check out this article/list: